Enabling GPU Acceleration

Speed up your Haystack application by engaging the GPU.

The Transformer models used in Haystack are designed to be run on GPU-accelerated hardware. The steps for GPU acceleration setup depend on the environment that you're working in.

Once the GPU is enabled on your machine, you can set the device on which a component loads its model.

For example, to load a model for the HuggingFaceLocalGenerator on the first GPU, set device=ComponentDevice.from_single(Device.gpu(id=0)) or device=ComponentDevice.from_str("cuda:0") when initializing.

You can find more information on the Device management page.
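
As a minimal sketch of the initialization described above, the following builds a HuggingFaceLocalGenerator on a chosen device. The helper names device_string and build_generator and the model name google/flan-t5-small are illustrative assumptions, not part of the Haystack API. The Haystack imports sit inside the function so that the helper module can be imported even where the haystack-ai package is not installed.

```python
from typing import Optional


def device_string(gpu_id: Optional[int] = 0) -> str:
    """Return a PyTorch-style device string: "cuda:<id>" for a GPU, "cpu" if gpu_id is None."""
    return "cpu" if gpu_id is None else f"cuda:{gpu_id}"


def build_generator(gpu_id: Optional[int] = 0, model: str = "google/flan-t5-small"):
    """Create a HuggingFaceLocalGenerator whose model is loaded on the chosen device."""
    # Local imports: these require the haystack-ai package (Haystack 2.x).
    from haystack.components.generators import HuggingFaceLocalGenerator
    from haystack.utils import ComponentDevice

    # For a GPU, this is equivalent to ComponentDevice.from_single(Device.gpu(id=gpu_id)),
    # with Device also imported from haystack.utils.
    device = ComponentDevice.from_str(device_string(gpu_id))
    return HuggingFaceLocalGenerator(model=model, device=device)
```

With Haystack installed, calling build_generator(0).warm_up() should load the model onto cuda:0, while build_generator(None) keeps it on the CPU.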

Enabling the GPU in Linux

-

Ensure that you have a fitting version of NVIDIA CUDA installed. To learn how to install CUDA, see the NVIDIA CUDA Guide for Linux.

-

Run the

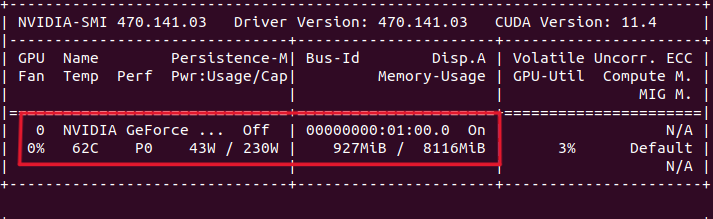

nvidia-smiin the command line to check if the GPU is enabled. If the GPU is enabled, the output shows a list of available GPUs and their memory usage:
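
The same check can be scripted, for example at application startup. The sketch below is one possible approach, not part of Haystack: the function name list_gpus is hypothetical, and it assumes that a missing nvidia-smi binary or a non-zero exit code means that no usable GPU is present.

```python
import shutil
import subprocess
from typing import List


def list_gpus() -> List[str]:
    """Return one "name, memory.used, memory.total" line per GPU, or [] if none is visible."""
    # Assumption: no nvidia-smi on PATH means the NVIDIA driver is not installed.
    if shutil.which("nvidia-smi") is None:
        return []
    try:
        result = subprocess.run(
            ["nvidia-smi", "--query-gpu=name,memory.used,memory.total", "--format=csv,noheader"],
            capture_output=True,
            text=True,
            timeout=30,
        )
    except (OSError, subprocess.TimeoutExpired):
        return []
    # A non-zero exit code typically indicates a driver or device problem.
    if result.returncode != 0:
        return []
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]


if __name__ == "__main__":
    gpus = list_gpus()
    print("\n".join(gpus) if gpus else "No GPU detected")
```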

Enabling the GPU in Colab

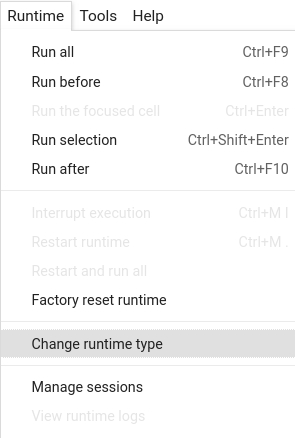

- In your Colab environment, select Runtime > Change Runtime type.

- Choose Hardware accelerator > GPU.

- To check if the GPU is enabled, run:

%%bash

nvidia-smi

The output should show the GPUs available and their usage.
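
You can also confirm from Python that the runtime sees the GPU. This is a minimal sketch that assumes PyTorch is available in the notebook, which is typically the case in Colab; the function name cuda_available is hypothetical, and it returns False when PyTorch is not installed.

```python
import importlib.util


def cuda_available() -> bool:
    """Return True if PyTorch is installed and reports at least one usable CUDA device."""
    # Assumption: without PyTorch there is no way for this sketch to query CUDA.
    if importlib.util.find_spec("torch") is None:
        return False
    import torch

    return torch.cuda.is_available()


if __name__ == "__main__":
    print("GPU available:", cuda_available())
```

If this prints False after you selected a GPU runtime, the runtime might not have restarted with the new hardware setting.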