Agent

An agent uses a large language model to generate accurate responses to complex queries. When initializing the agent, you give it tools, which can be pipelines or nodes. The agent then uses these tools iteratively to arrive at the best answer.

It's useful in scenarios where arriving at the correct answer takes several iterations and requires collecting more knowledge first.

What's the Agent For?

You can use the agent to build apps that use large language models (LLMs) in a loop to answer questions far more complex than those extractive or generative QA can handle. The agent combines a large language model with the tools you give it. The Haystack agent can use pipelines and components as tools.

Agents are useful in multihop question answering scenarios, where the agent must use a combination of multiple pieces of information from different sources to arrive at an answer.

When given a query, the agent breaks it down into substeps and chooses the right tool to start with. Once it receives the output from that tool, it can use it as input for another tool. It continues this way until it reaches the best answer.

Conversational Agent

A conversational agent extends the agent with memory and can also manage a set of tools. This means you can have a continuous, natural chat with the app: it keeps track of both your inquiries and its own answers, enabling a human-like conversation. To use the ConversationalAgent, choose the LLM you want to work with and initialize the agent with a PromptNode that uses it.

You can add various tools to your chat application using the tools parameter. If you don't provide any, the ConversationalAgent uses the conversational-agent-without-tools prompt by default.

Tutorial

To start building with Conversational Agent, have a look at our Building a Conversational Chat App tutorial.

How It Works

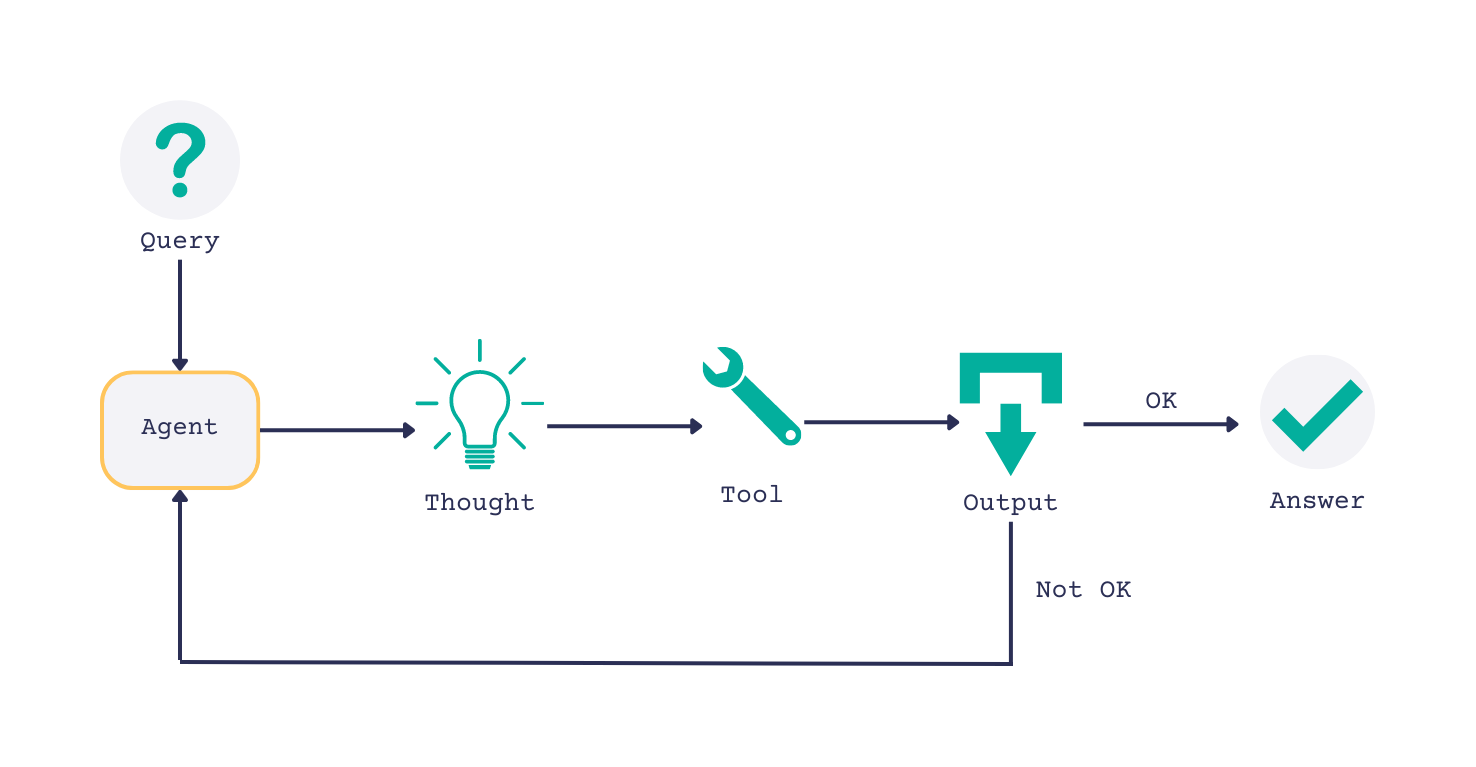

The agent acts iteratively, which means it can use the tools many times to refine its response. This makes it well suited to complex queries that require multiple iterations.

Here's how it can work:

1. The agent gets your query.

2. The agent generates a thought. The thought is the first part of the prompt. Its goal is to break the query down into smaller steps.

3. Based on the thought, the agent chooses the tool to use and generates a text input for that tool.

4. Based on the output the agent gets from the tool, it either stops and returns the answer or repeats the process from step 2.

5. The agent iterates over the steps as many times as it needs to generate an accurate answer.
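To make the loop concrete, here's a minimal, framework-free sketch in plain Python. It is not Haystack's implementation: the scripted LLM, the TOOLS dictionary, the canned answers, and run_agent are hypothetical stand-ins, and the regular expressions are simplified assumptions. It shows the thought, tool, and observation cycle, with a step limit similar in spirit to max_steps.

```python
import re
from typing import Callable, Dict, List

# Hypothetical tool: a canned lookup table standing in for a real search pipeline.
CANNED_ANSWERS = {
    "Who is the father of the Princes in the Tower?": "Edward IV",
    "When was Edward IV born?": "April 28, 1442",
}
TOOLS: Dict[str, Callable[[str], str]] = {
    "Search": lambda q: CANNED_ANSWERS.get(q, "No result"),
}

# Simplified patterns; assumptions for this sketch, not Haystack's defaults.
TOOL_RE = re.compile(r"Tool:\s*(\w+)\s*Tool Input:\s*(.+)")
FINAL_RE = re.compile(r"Final Answer\s*:\s*(.*)")


def make_scripted_llm(replies: List[str]) -> Callable[[str], str]:
    """Stand-in for an LLM: returns the scripted replies in order and ignores the prompt."""
    replies_iter = iter(replies)
    return lambda prompt: next(replies_iter)


def run_agent(query: str, llm: Callable[[str], str], max_steps: int = 8) -> str:
    transcript = ""  # running monologue of thoughts, tool calls, and observations
    for _ in range(max_steps):
        # Generate the next thought from the query plus everything so far.
        output = llm(f"Question: {query}\nThought: {transcript}")
        transcript += output
        # Stop as soon as the model produces a final answer.
        final = FINAL_RE.search(output)
        if final:
            return final.group(1).strip()
        # Otherwise run the chosen tool and feed its observation back in.
        call = TOOL_RE.search(output)
        if call is None:
            break  # neither a tool call nor a final answer
        tool = TOOLS.get(call.group(1))
        observation = tool(call.group(2).strip()) if tool else f"Unknown tool {call.group(1)}"
        transcript += f"\nObservation: {observation}\nThought: "
    return "No final answer"


llm = make_scripted_llm([
    "I need the father first.\nTool: Search\nTool Input: Who is the father of the Princes in the Tower?",
    "Now I need his birth year.\nTool: Search\nTool Input: When was Edward IV born?",
    "I have everything I need.\nFinal Answer: 1442",
])
print(run_agent("What year was the father of the Princes in the Tower born?", llm))  # → 1442
```

The step limit matters: without it, a model that never emits a final answer would keep calling tools forever.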

Implementation

You initialize the agent with a PromptNode, specifying the PromptModel you want to use. We recommend using OpenAI's gpt-3.5-turbo model through the OpenAI API.

The agent uses the PromptNode with the default zero-shot-react PromptTemplate. This is a template we wrote for the agent. You can modify it or create a new template based on it, but it should follow the same format.

PromptTemplates

You initialize the agent with a PromptNode, whose default PromptTemplate is zero-shot-react.

Zero-shot-react PromptTemplate

PromptTemplate(
    name="zero-shot-react",
    prompt_text="You are a helpful and knowledgeable agent. To achieve your goal of answering complex questions "
    "correctly, you have access to the following tools:\n\n"
    "{tool_names_with_descriptions}\n\n"
    "To answer questions, you'll need to go through multiple steps involving step-by-step thinking and "
    "selecting appropriate tools and their inputs; tools will respond with observations. When you are ready "
    "for a final answer, respond with the `Final Answer:`\n\n"
    "Use the following format:\n\n"
    "Question: the question to be answered\n"
    "Thought: Reason if you have the final answer. If yes, answer the question. If not, find out the missing information needed to answer it.\n"
    "Tool: pick one of {tool_names} \n"
    "Tool Input: the input for the tool\n"
    "Observation: the tool will respond with the result\n"
    "...\n"
    "Final Answer: the final answer to the question, make it short (1-5 words)\n\n"
    "Thought, Tool, Tool Input, and Observation steps can be repeated multiple times, but sometimes we can find an answer in the first pass\n"
    "---\n\n"
    "Question: {query}\n"
    "Thought: Let's think step-by-step, I first need to ",
)
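The placeholders in curly braces are filled in at run time. Haystack performs this substitution itself, so you don't need to write this code; purely as an illustration, the sketch below renders a shortened excerpt of the template with Python's str.format. The render_prompt helper and the "name: description" and comma-separated formatting are assumptions that mirror the idea, not necessarily Haystack's exact output.

```python
from typing import Dict

# Shortened excerpt of the zero-shot-react prompt text, for illustration only.
TEMPLATE = (
    "You have access to the following tools:\n\n"
    "{tool_names_with_descriptions}\n\n"
    "Tool: pick one of {tool_names} \n"
    "Question: {query}\n"
    "Thought: Let's think step-by-step, I first need to "
)


def render_prompt(query: str, tools: Dict[str, str]) -> str:
    """Hypothetical helper: fill the placeholders from a {name: description} mapping."""
    return TEMPLATE.format(
        tool_names_with_descriptions="\n".join(f"{name}: {desc}" for name, desc in tools.items()),
        tool_names=", ".join(tools),
        query=query,
    )


print(render_prompt(
    "Who was the first president of the USA?",
    {"USA_Presidents_QA": "useful for when you need to answer questions related to the presidents of the USA."},
))
```

Because the tool descriptions end up verbatim in the prompt, they are the only information the model has when it picks a tool.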

You can modify this template, keeping the same format. Here are some considerations:

- You can change the initial instructions ("You are a helpful and knowledgeable agent. To achieve your goal of answering complex questions correctly, you have access to the following tools:") and the part starting with "To answer questions, you'll". Try experimenting with different text to see if it changes the agent's performance.

- You can change the Tool, Tool Input, and Final Answer sections. If you do, make sure you update the regular expressions in the tool_pattern and final_answer_pattern parameters, respectively. You can set these parameters when initializing the agent. The agent uses them to extract the tool name and input, or the final answer, from the text its PromptNode generates.

- You can change the Observation part. If you do, make sure you update the stop_words parameter of the agent's PromptNode to the word you replaced Observation with.

- To make bigger changes to the template, create your own Agent class that inherits from the Haystack Agent.

- For ReAct agents, include a {transcript} parameter at the end of the prompt to keep the agent from getting stuck in a loop of thoughts and observations. The transcript represents the agent's internal monologue and is stored internally.
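To see why the stop word and the two patterns must stay in sync with the template, here's a plain-Python sketch that post-processes one generation the way the considerations above describe: cut the text at the stop word, then look for a final answer or a tool call. The patterns are simplified assumptions written for a hypothetical template that renamed Tool and Tool Input to Action and Action Input; they are not Haystack's default tool_pattern or final_answer_pattern.

```python
import re
from typing import Optional, Tuple

# Hypothetical customized sections: "Action"/"Action Input" instead of "Tool"/"Tool Input".
TOOL_PATTERN = r"Action:\s*(\w+)\s*Action Input:\s*(.+)"
FINAL_ANSWER_PATTERN = r"Final Answer\s*:\s*(.*)"
STOP_WORDS = ["Observation:"]


def truncate_at_stop_words(text: str) -> str:
    """Cut the generation at the earliest stop word, where the LLM would stop generating."""
    cut = len(text)
    for word in STOP_WORDS:
        idx = text.find(word)
        if idx != -1:
            cut = min(cut, idx)
    return text[:cut]


def parse_step(generation: str) -> Tuple[Optional[str], Optional[str], Optional[str]]:
    """Return (tool_name, tool_input, final_answer); fields that don't apply are None."""
    text = truncate_at_stop_words(generation)
    final = re.search(FINAL_ANSWER_PATTERN, text)
    if final:
        return None, None, final.group(1).strip()
    tool = re.search(TOOL_PATTERN, text)
    if tool:
        return tool.group(1), tool.group(2).strip(), None
    return None, None, None


# The model invents its own observation; truncating at the stop word discards it.
print(parse_step("I need his birth date.\nAction: Search\nAction Input: When was Edward IV born?\nObservation: 1999"))
# → ('Search', 'When was Edward IV born?', None)
```

If you renamed the sections in the template but kept patterns that look for "Tool:", nothing would match and the agent could not call any tool.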

For guidance on writing efficient prompts, see Prompt Engineering Guidelines.

The following PromptTemplates are specifically applicable for the Conversational Agent:

- conversational-agent: Initializes the conversational agent with memory and tools.

- conversational-agent-without-tools: Initializes the conversational agent with memory but without tools.

- conversational-summary: Condenses and shortens the chat transcript without losing important information.

Conversational Agent Memory

By default, the ConversationalAgent stores the full history of the conversation in a ConversationMemory.

However, if the conversation gets too long, you can choose to define a ConversationSummaryMemory to save space as well as LLM tokens. The summary will contain a brief overview of what has already been discussed, preserving the most important information, and will update as the conversation progresses.

To use the ConversationSummaryMemory, initialize it by defining a PromptNode for generating summaries. You can either reuse the PromptNode from your ConversationalAgent, or define a new one with another LLM.
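The difference between the two memory types can be sketched in plain Python. FullMemory and SummaryMemory below are hypothetical illustrations, not Haystack's ConversationMemory and ConversationSummaryMemory, and the summarize callable stands in for the PromptNode that would run the conversational-summary template. This sketch summarizes whenever the buffer exceeds max_turns; the real class may use a different trigger.

```python
from typing import Callable, List


class FullMemory:
    """Keeps every turn verbatim, like storing the full conversation history."""

    def __init__(self) -> None:
        self.turns: List[str] = []

    def save(self, human: str, ai: str) -> None:
        self.turns.append(f"Human: {human}\nAI: {ai}")

    def load(self) -> str:
        return "\n".join(self.turns)


class SummaryMemory(FullMemory):
    """Folds buffered turns into a running summary once the buffer grows past max_turns."""

    def __init__(self, summarize: Callable[[str], str], max_turns: int = 2) -> None:
        super().__init__()
        self.summarize = summarize  # stand-in for a PromptNode producing summaries
        self.max_turns = max_turns
        self.summary = ""

    def save(self, human: str, ai: str) -> None:
        super().save(human, ai)
        if len(self.turns) > self.max_turns:
            # Condense the previous summary plus the buffered turns into a new summary.
            self.summary = self.summarize("\n".join([self.summary, *self.turns]).strip())
            self.turns = []

    def load(self) -> str:
        return "\n".join(part for part in [self.summary, *self.turns] if part)


# A fake summarizer that just counts turns, so the example runs without an LLM.
memory = SummaryMemory(summarize=lambda text: f"[summary of {text.count('Human:')} turns]", max_turns=2)
for i in range(3):
    memory.save(f"Question {i}", f"Answer {i}")
print(memory.load())  # → [summary of 3 turns]
```

The trade-off is visible here: the summary keeps the prompt short, but anything the summarizer drops is gone for good.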

Tools

You can give the agent the tools you want it to use. Choosing the right tools is crucial to ensure the Agent can find the best answer.

A tool can be a Haystack pipeline or a pipeline node. Haystack 1.15 adds three new components that you can also use as tools: SearchEngine, WebRetriever, and TopPSampler.

The Agent can use the tools multiple times until it finds the correct answer. To prevent it from using the tools indefinitely, the max_steps parameter limits the number of iterations, which is eight by default. You can set max_steps when initializing the agent or change it for each individual run of the agent.

When you define a tool, you must describe it so that the agent knows when to use it. For example descriptions, see Examples.

Agent tools must return meaningful textual results so that the Agent can reason about the next steps. If a tool returns None, it breaks the Agent iterations. When you create a tool, make sure it returns text.
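One defensive pattern, sketched below as an assumption rather than something Haystack does for you, is to wrap whatever code produces a tool's output so that it always hands the Agent a string. The ensure_text decorator and the lookup_capital function are hypothetical.

```python
import functools
from typing import Callable, Optional


def ensure_text(fallback: str = "No result found.") -> Callable:
    """Decorator: turn None or blank results into a fallback string the agent can reason about."""

    def decorator(fn: Callable[[str], Optional[object]]) -> Callable[[str], str]:
        @functools.wraps(fn)
        def wrapper(tool_input: str) -> str:
            result = fn(tool_input)
            if result is None or (isinstance(result, str) and not result.strip()):
                return fallback
            return str(result)

        return wrapper

    return decorator


@ensure_text()
def lookup_capital(country: str) -> Optional[str]:
    """Hypothetical tool backend that returns None for unknown countries."""
    return {"France": "Paris", "Japan": "Tokyo"}.get(country)


print(lookup_capital("France"))    # → Paris
print(lookup_capital("Atlantis"))  # → No result found.
```

A textual fallback such as "No result found." lets the agent decide to rephrase its input or try another tool instead of stopping.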

Below is an overview of the tools ready for your Agent to use.

Haystack Pipelines

You can use the Agent with the following ready-made pipelines:

- WebQAPipeline

- ExtractiveQAPipeline

- DocumentSearchPipeline

- GenerativeQAPipeline

- SearchSummarizationPipeline

- FAQPipeline

- TranslationWrapperPipeline

- QuestionGenerationPipeline

Pipelines are best if you want the Agent to use a specific set of files to arrive at the answer and you don't need it to access the internet.

Pipeline Nodes

You can use the Agent with pipeline nodes that expect a single text query as input, for example:

- PromptNode

- QueryClassifier

- Retriever, including the new WebRetriever

- Translator

You can add multiple nodes as Agent tools or you can combine them with pipelines.

Web Tools

If you need the Agent to access the internet to find the best answer, you can add WebRetriever or SearchEngine to its tools.

WebRetriever

Uses the SearchEngine under the hood to fetch the results from the internet. It can fetch whole web pages or just page snippets. It can then turn the results into Haystack documents and store them in a DocumentStore to save some resources.

You can use it together with a PreProcessor to configure how the Documents are processed. You can also configure the top_p parameter, which limits the output to the most relevant results. For more information, see Retrieval from the Web.

SearchEngine

Finds the answers on the internet using page snippets, not the whole pages. You can choose the search engine provider you want to use, for example, SerperDev, SerpAPI or Bing API.

SearchEngine fetches all the answers it thinks match the query, so if you want to filter the results, you must use it in combination with TopPSampler. This way, you can configure the top_p parameter to be used when fetching answers. For more information, see SearchEngine and TopPSampler.
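The top_p idea can be illustrated independently of Haystack: convert relevance scores into probabilities, rank them, and keep the smallest set of results whose cumulative probability reaches top_p. The sketch below is a generic version of that technique with made-up scores and a hypothetical top_p_filter function; it is an assumption about the approach, not TopPSampler's actual code.

```python
import math
from typing import List, Tuple


def top_p_filter(results: List[Tuple[str, float]], top_p: float = 0.9) -> List[str]:
    """Keep the highest-probability results until their cumulative probability reaches top_p."""
    if not results:
        return []
    # Softmax over raw relevance scores (shifted by the max for numerical stability).
    max_score = max(score for _, score in results)
    exps = [(text, math.exp(score - max_score)) for text, score in results]
    total = sum(e for _, e in exps)
    ranked = sorted(((text, e / total) for text, e in exps), key=lambda item: item[1], reverse=True)

    kept, cumulative = [], 0.0
    for text, prob in ranked:
        kept.append(text)  # the best result is always kept
        cumulative += prob
        if cumulative >= top_p:
            break
    return kept


# Made-up relevance scores for four search snippets.
scored_snippets = [("Snippet A", 5.0), ("Snippet B", 4.0), ("Snippet C", 0.0), ("Snippet D", -1.0)]
print(top_p_filter(scored_snippets, top_p=0.9))  # → ['Snippet A', 'Snippet B']
```

Unlike a fixed top_k, the number of kept results adapts: one dominant snippet is kept alone, while several similarly scored snippets are all kept.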

Model

When initializing the agent, you must specify the PromptModel. We recommend using OpenAI's gpt-3.5-turbo model. You need an API key from an active OpenAI account to use this model.

Usage

You initialize the agent programmatically. It requires a few steps:

1. Configure a PromptNode you'll initialize the agent with:

   - Specify the model_name_or_path, api_key, and stop_words for the PromptNode.

   - If you modified the default PromptTemplate for the PromptNode:

     - If you changed the Tool or Tool Input sections of the template, adjust the regular expression in the tool_pattern parameter. You can do it when initializing the agent.

     - If you changed the Final Answer: section of the template, update the regular expression in the final_answer_pattern parameter. You can do it when initializing the agent.

     - If you modified the Observation section of the template, update the stop word in the agent's PromptNode.

   - If you're using the default PromptTemplate, set Observation as the stop word for the PromptNode:

     prompt_node = PromptNode(model_name_or_path="gpt-3.5-turbo-instruct", api_key=api_key, stop_words=["Observation:"])

2. Specify the tools for the agent:

   - Give each tool a short name. Use only letters (a-z, A-Z), digits (0-9), and underscores (_).

   - Add a description for each tool. The agent uses the description to decide when to use the tool. The description should explain what the tool is useful for and when it's useful. The more specific the instruction, the better.

     search_tool = Tool(name="USA_Presidents_QA", description="useful for when you need to answer questions related to the presidents of the USA.", pipeline_or_node=presidents_qa, output_variable="answers")

3. Initialize the agent with the PromptNode, adding the tools you want it to use:

   agent = Agent(prompt_node=prompt_node, tools_manager=ToolsManager([search_tool]))

4. Run the agent with a query using agent.run().
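The tool-naming rule above (letters, digits, and underscores only) is easy to check before you build your tools. The validate_tool_name helper below is a hypothetical check written against that rule; Haystack may apply its own validation.

```python
import re

# Letters (a-z, A-Z), digits (0-9), and underscores (_) only, at least one character.
TOOL_NAME_RE = re.compile(r"[A-Za-z0-9_]+")


def validate_tool_name(name: str) -> str:
    """Return the name unchanged if it follows the naming rule, otherwise raise ValueError."""
    if not TOOL_NAME_RE.fullmatch(name):
        raise ValueError(f"Invalid tool name {name!r}: use only letters, digits, and underscores")
    return name


for candidate in ["USA_Presidents_QA", "Search", "web search", "qa-tool"]:
    try:
        print(validate_tool_name(candidate), "OK")
    except ValueError as err:
        print(err)
```

Names with spaces or hyphens are risky because the model has to reproduce the tool name exactly after "Tool:", and a simple word-character pattern would not capture them.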

Examples

Agent

This agent uses a PromptNode with the gpt-3.5-turbo model. As a tool, it has the WebQAPipeline that can check the web for answers.

import os

from haystack.agents import Agent, Tool
from haystack.agents.base import ToolsManager
from haystack.nodes import PromptNode, PromptTemplate
from haystack.nodes.retriever.web import WebRetriever
from haystack.pipelines import WebQAPipeline

# API keys for the web search provider (SerperDev) and for OpenAI
search_key = os.environ.get("SERPERDEV_API_KEY")
if not search_key:
    raise ValueError("Please set the SERPERDEV_API_KEY environment variable")

openai_key = os.environ.get("OPENAI_API_KEY")
if not openai_key:
    raise ValueError("Please set the OPENAI_API_KEY environment variable")

# PromptNode the WebQAPipeline uses to answer questions from the retrieved web documents
pn = PromptNode(
    "gpt-3.5-turbo",
    api_key=openai_key,
    max_length=256,
    default_prompt_template="question-answering-with-document-scores",
)
web_retriever = WebRetriever(api_key=search_key)
pipeline = WebQAPipeline(retriever=web_retriever, prompt_node=pn)

# Few-shot ReAct prompt; the agent fills in {query} and {transcript}
few_shot_prompt = """
You are a helpful and knowledgeable agent. To achieve your goal of answering complex questions correctly, you have access to the following tools:

Search: useful for when you need to Google questions. You should ask targeted questions, for example, Who is Anthony Dirrell's brother?

To answer questions, you'll need to go through multiple steps involving step-by-step thinking and selecting appropriate tools and their inputs; tools will respond with observations. When you are ready for a final answer, respond with the `Final Answer:`

Examples:
##
Question: Anthony Dirrell is the brother of which super middleweight title holder?
Thought: Let's think step by step. To answer this question, we first need to know who Anthony Dirrell is.
Tool: Search
Tool Input: Who is Anthony Dirrell?
Observation: Boxer
Thought: We've learned Anthony Dirrell is a Boxer. Now, we need to find out who his brother is.
Tool: Search
Tool Input: Who is Anthony Dirrell brother?
Observation: Andre Dirrell
Thought: We've learned Andre Dirrell is Anthony Dirrell's brother. Now, we need to find out what title Andre Dirrell holds.
Tool: Search
Tool Input: What is the Andre Dirrell title?
Observation: super middleweight
Thought: We've learned Andre Dirrell title is super middleweight. Now, we can answer the question.
Final Answer: Andre Dirrell
##
Question: What year was the party of the winner of the 1971 San Francisco mayoral election founded?
Thought: Let's think step by step. To answer this question, we first need to know who won the 1971 San Francisco mayoral election.
Tool: Search
Tool Input: Who won the 1971 San Francisco mayoral election?
Observation: Joseph Alioto
Thought: We've learned Joseph Alioto won the 1971 San Francisco mayoral election. Now, we need to find out what party he belongs to.
Tool: Search
Tool Input: What party does Joseph Alioto belong to?
Observation: Democratic Party
Thought: We've learned Democratic Party is the party of Joseph Alioto. Now, we need to find out when the Democratic Party was founded.
Tool: Search
Tool Input: When was the Democratic Party founded?
Observation: 1828
Thought: We've learned the Democratic Party was founded in 1828. Now, we can answer the question.
Final Answer: 1828
##
Question: {query}
Thought:
{transcript}
"""
few_shot_agent_template = PromptTemplate(few_shot_prompt)

# The agent's own PromptNode stops generating at "Observation:" so the tool supplies the observation
prompt_node = PromptNode(
    "gpt-3.5-turbo", api_key=openai_key, max_length=512, stop_words=["Observation:"]
)

# Expose the WebQAPipeline to the agent as the "Search" tool
web_qa_tool = Tool(
    name="Search",
    pipeline_or_node=pipeline,
    description="useful for when you need to Google questions.",
    output_variable="results",
)

agent = Agent(
    prompt_node=prompt_node, prompt_template=few_shot_agent_template, tools_manager=ToolsManager([web_qa_tool])
)

# Multi-hop test questions
hotpot_questions = [
    "What year was the father of the Princes in the Tower born?",
    "Name the movie in which the daughter of Noel Harrison plays Violet Trefusis.",
    "Where was the actress who played the niece in the Priest film born?",
    "Which author is English: John Braine or Studs Terkel?",
]

for question in hotpot_questions:
    result = agent.run(query=question)
    print(f"\n{result}")

Here's a transcript for the first question of the example above:

Agent custom-at-query-time started with {'query': 'What year was the father of the Princes in the Tower born?', 'params': None}

Let's think step by step. To answer this question, we first need to know who the father of the Princes in the Tower is.

Tool: Search

Tool Input: Who is the father of the Princes in the Tower?

Observation: Edward IV.

Thought: We've learned Edward IV is the father of the Princes in the Tower. Now, we need to find out when he was born.

Tool: Search

Tool Input: When was Edward IV born?

Observation: April 28, 1442.

Thought: We've learned Edward IV was born on April 28, 1442. Now, we can answer the question.

Final Answer: 1442

Conversational Agent

This simple Conversational Agent uses only the gpt-3.5-turbo model and can immediately start answering your queries using the model's capabilities.

import os

from haystack.agents.conversational import ConversationalAgent
from haystack.nodes import PromptNode

# Initialize your PromptNode
pn = PromptNode("gpt-3.5-turbo", api_key=os.environ.get("OPENAI_API_KEY"), max_length=256)
agent = ConversationalAgent(pn)

# Try it out: chat in a loop until the user types "exit" or "quit"
while True:
    user_input = input("Human (type 'exit' or 'quit' to quit, 'memory' for agent's memory): ")
    if user_input.lower() in ("exit", "quit"):
        break
    if user_input.lower() == "memory":
        # Show what the agent has stored about the conversation so far
        print("\nMemory:\n", agent.memory.load())
    else:
        assistant_response = agent.run(user_input)
        print("\nAssistant:", assistant_response)
