QueryClassifier

QueryClassifier routes your queries to the branches of your pipeline that are best suited to handling them. You can use it to classify keyword-based queries and questions to achieve better search results. This page contains all the information you need to use the QueryClassifier in your app.

For example, you can route keyword queries to a less computationally expensive sparse Retriever, while passing questions to a dense Retriever, thus saving you time and GPU resources. The QueryClassifier can also categorize queries by topic and, based on that, route them to the appropriate database.

The QueryClassifier populates the metadata fields of the query with the classification label and can also handle the routing.

| Position in a Pipeline | At the beginning of a query Pipeline |
| Input | Query |
| Output | Query |
| Classes | TransformersQueryClassifier, SklearnQueryClassifier |

Usage

You can use the QueryClassifier as a stand-alone node, in which case it returns two objects: the query and the name of the output edge. To do so, run:

from haystack.nodes import TransformersQueryClassifier

queries = [
    "Arya Stark father",
    "Jon Snow UK",
    "who is the father of arya stark?",
    "Which country was jon snow filmed in?",
]

question_classifier = TransformersQueryClassifier(model_name_or_path="shahrukhx01/bert-mini-finetune-question-detection")
# Or Sklearn based: initialize a SklearnQueryClassifier instead (see Models).

for query in queries:
    # run() returns a tuple: (output dictionary, name of the output edge)
    result = question_classifier.run(query=query)
    if result[1] == "output_1":
        category = "question"
    else:
        category = "keywords"
    print(f"Query: {query}, raw_output: {result}, class: {category}")

# Returns:
# Query: Arya Stark father, raw_output: ({'query': 'Arya Stark father'}, 'output_2'), class: keywords
# Query: Jon Snow UK, raw_output: ({'query': 'Jon Snow UK'}, 'output_2'), class: keywords
# Query: who is the father of arya stark?, raw_output: ({'query': 'who is the father of arya stark?'}, 'output_1'), class: question
# Query: Which country was jon snow filmed in?, raw_output: ({'query': 'Which country was jon snow filmed in?'}, 'output_1'), class: question
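Because the stand-alone call returns an `(output, edge_name)` tuple, downstream code usually has to translate the edge name into a readable label. The following is a minimal sketch that reuses the raw outputs shown above as plain tuples, so it runs without loading a model. `EDGE_TO_CATEGORY` and `categorize` are illustrative names, not part of Haystack:

```python
# Illustrative helper (not part of Haystack): translate the edge name returned
# by a QueryClassifier into a human-readable category.
EDGE_TO_CATEGORY = {
    "output_1": "question",
    "output_2": "keywords",
}


def categorize(result):
    """Return (query, category) for a raw `(output, edge_name)` result tuple."""
    output, edge_name = result
    return output["query"], EDGE_TO_CATEGORY[edge_name]


# Raw outputs copied from the example above, so no model is needed here.
raw_results = [
    ({"query": "Arya Stark father"}, "output_2"),
    ({"query": "who is the father of arya stark?"}, "output_1"),
]

categorized = [categorize(result) for result in raw_results]
for query, category in categorized:
    print(f"Query: {query}, class: {category}")
```

Keeping the mapping in one dictionary means you only change it in one place if you switch to a model whose edges carry different meanings.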

To use the QueryClassifier within a pipeline as a decision node, run:

from haystack import Pipeline
from haystack.nodes import TransformersQueryClassifier
from haystack.utils import print_answers

# Assumes dpr_retriever and bm25_retriever are Retriever nodes that you have already initialized.
query_classifier = TransformersQueryClassifier(model_name_or_path="shahrukhx01/bert-mini-finetune-question-detection")

pipe = Pipeline()
pipe.add_node(component=query_classifier, name="QueryClassifier", inputs=["Query"])
# output_1 (questions and statements) goes to the dense Retriever
pipe.add_node(component=dpr_retriever, name="DensePassageRetriever", inputs=["QueryClassifier.output_1"])
# output_2 (keyword queries) goes to the sparse Retriever
pipe.add_node(component=bm25_retriever, name="BM25Retriever", inputs=["QueryClassifier.output_2"])

# Pass a question -> run DPR
res_1 = pipe.run(query="Who is the father of Arya Stark?")

# Pass keywords -> run the BM25Retriever
res_2 = pipe.run(query="arya stark father")

Here, the query is processed by only one branch of the pipeline, depending on the output of the QueryClassifier.
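To make the branching behavior concrete, here is a toy sketch in plain Python. It is not how Haystack implements pipelines: `toy_classifier`, `dense_branch`, and `sparse_branch` are hypothetical stand-ins, and the question test is a crude heuristic used in place of a trained model. The sketch only shows that the edge name selects exactly one branch:

```python
# Toy stand-ins (hypothetical, not Haystack APIs) illustrating a decision node.
QUESTION_WORDS = ("who", "what", "when", "where", "which", "why", "how")


def toy_classifier(query):
    """Crude heuristic in place of a trained model: returns (output, edge_name)."""
    words = query.strip().lower().split()
    first_word = words[0] if words else ""
    is_question = query.strip().endswith("?") or first_word in QUESTION_WORDS
    return {"query": query}, ("output_1" if is_question else "output_2")


calls = []  # records which branch handled each query


def dense_branch(query):
    calls.append(("dense", query))
    return f"dense results for: {query}"


def sparse_branch(query):
    calls.append(("sparse", query))
    return f"sparse results for: {query}"


BRANCHES = {"output_1": dense_branch, "output_2": sparse_branch}


def run_toy_pipeline(query):
    output, edge_name = toy_classifier(query)
    # Only the branch attached to the selected edge runs.
    return BRANCHES[edge_name](output["query"])


res_1 = run_toy_pipeline("Who is the father of Arya Stark?")
res_2 = run_toy_pipeline("arya stark father")
print(calls)
```

Each query appears once in `calls`, which mirrors the point that the other branch, and its compute cost, is skipped entirely.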

Classifying Keyword, Question, and Statement Queries

Keyword

Keyword queries don't have sentence structure. They consist of keywords, and the order of the words does not matter. For example:

- arya stark father

- jon snow country

- arya stark younger brothers

Question

In such queries, users ask a question in a complete, grammatical sentence. A QueryClassifier can classify a query regardless of whether it ends with a question mark. Examples of questions:

- who is the father of arya stark?

- which country was jon snow filmed in

- who are the younger brothers of arya stark?

Statement

This type of query is a declarative sentence, such as:

- Arya stark was a daughter of a lord.

- Show countries that Jon snow was filmed in.

- List all brothers of Arya.

One recommended setup is to route both questions and statements to a DensePassageRetriever, which is better suited to these query types, while routing keyword queries to a BM25Retriever to save GPU resources.

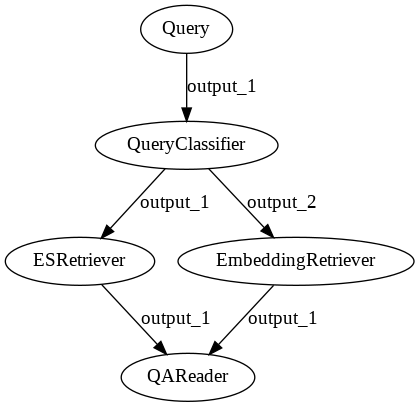

You can see a code example of this configuration in Usage. This pipeline has a TransformersQueryClassifier that routes questions and statements to the node's output_1 and keyword queries to output_2. The dense Retriever (the DensePassageRetriever in the Usage example) takes its input from QueryClassifier.output_1, and the BM25Retriever takes its input from QueryClassifier.output_2.

A pipeline where the QueryClassifier routes queries to either a BM25Retriever or an EmbeddingRetriever.

An alternative setup is to route questions to a question answering branch and keywords to a document search branch:

from haystack import Pipeline
from haystack.nodes import TransformersQueryClassifier
from haystack.utils import print_answers

# Assumes dpr_retriever, bm25_retriever, and reader are nodes that you have already initialized.
query_classifier = TransformersQueryClassifier(model_name_or_path="shahrukhx01/question-vs-statement-classifier")

pipe = Pipeline()
pipe.add_node(component=query_classifier, name="QueryClassifier", inputs=["Query"])
pipe.add_node(component=dpr_retriever, name="DPRRetriever", inputs=["QueryClassifier.output_1"])
pipe.add_node(component=bm25_retriever, name="BM25", inputs=["QueryClassifier.output_2"])
# The Reader is attached only to the dense branch, so only that branch returns answers.
pipe.add_node(component=reader, name="QAReader", inputs=["DPRRetriever"])

# Pass a question -> run DPR + QA -> return answers
res_1 = pipe.run(query="Who is the father of Arya Stark?")

# Pass keywords -> run only BM25Retriever -> return Documents
res_2 = pipe.run(query="arya stark father")

Models

To classify keywords, questions, and statements, you can use either the TransformersQueryClassifier or the SklearnQueryClassifier. The TransformersQueryClassifier is more accurate than the SklearnQueryClassifier because it is sensitive to the syntax of a sentence. However, it requires more memory and needs a GPU to run quickly. You can mitigate those downsides by choosing a smaller transformer model. The default model used in the TransformersQueryClassifier is shahrukhx01/bert-mini-finetune-question-detection. We trained this model using a mini BERT architecture of about 50 MB in size, which allows relatively fast inference on the CPU.

bert-mini-finetune-question-detection

A model trained on the mini BERT architecture that distinguishes between questions/statements and keywords. To initialize, run:

TransformersQueryClassifier(model_name_or_path="shahrukhx01/bert-mini-finetune-question-detection")

- Output 1: Question/Statement

- Output 2: Keyword

To find out more about how it was trained and how well it performed, see its model card.

question-vs-statement-classifier

A model trained on the mini BERT architecture that distinguishes between questions and statements. To initialize, run:

TransformersQueryClassifier(model_name_or_path="shahrukhx01/question-vs-statement-classifier")

- Output 1: Question

- Output 2: Statement

To find out more about how it was trained and how well it performed, see its model card.

gradboost_query_classifier

A gradient boosting classifier that distinguishes between questions/statements and keywords. To initialize, run:

SklearnQueryClassifier(
    query_classifier="https://ext-models-haystack.s3.eu-central-1.amazonaws.com/gradboost_query_classifier/model.pickle",
    query_vectorizer="https://ext-models-haystack.s3.eu-central-1.amazonaws.com/gradboost_query_classifier/vectorizer.pickle",
)

- Output 1: Question/Statement

- Output 2: Keyword

To find out more about how it was trained and how well it performed, see its Readme.

gradboost_query_classifier_statements

A gradient boosting classifier that distinguishes between questions and statements. To initialize, run:

SklearnQueryClassifier(
    query_classifier="https://ext-models-haystack.s3.eu-central-1.amazonaws.com/gradboost_query_classifier_statements/model.pickle",
    query_vectorizer="https://ext-models-haystack.s3.eu-central-1.amazonaws.com/gradboost_query_classifier_statements/vectorizer.pickle",
)

- Output 1: Question

- Output 2: Statement

To find out more about how it was trained and how well it performed, see its Readme.
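The output edges of the four models above can be summarized in a small lookup table, which is handy when you name pipeline branches. This is a sketch based only on what each model in this section is described as distinguishing. `OUTPUT_MEANINGS` and `describe_edge` are illustrative names, not Haystack APIs:

```python
# Illustrative summary (not a Haystack API) of what each output edge carries,
# based on what each model above is described as distinguishing.
OUTPUT_MEANINGS = {
    "shahrukhx01/bert-mini-finetune-question-detection": ("question/statement", "keyword"),
    "shahrukhx01/question-vs-statement-classifier": ("question", "statement"),
    "gradboost_query_classifier": ("question/statement", "keyword"),
    "gradboost_query_classifier_statements": ("question", "statement"),
}


def describe_edge(model_name, edge_name):
    """Map an edge name such as 'output_2' to the query type routed to it."""
    index = int(edge_name.rsplit("_", 1)[1]) - 1  # 'output_2' -> 1
    return OUTPUT_MEANINGS[model_name][index]


print(describe_edge("shahrukhx01/question-vs-statement-classifier", "output_2"))
```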

Zero-Shot Classification

While most classification models, like the ones in the examples above, have a predefined set of labels, zero-shot classification models can perform classification with any set of labels you define. To initialize a zero-shot QueryClassifier, run:

from haystack.nodes import TransformersQueryClassifier

# In zero-shot-classification, you can choose the labels
labels = ["music", "cinema"]

query_classifier = TransformersQueryClassifier(
    model_name_or_path="typeform/distilbert-base-uncased-mnli",
    use_gpu=True,
    task="zero-shot-classification",
    labels=labels,
)

Here the labels variable defines which labels you want the QueryClassifier to use. The model you pick should be a natural language inference model such as those trained on the Multi-Genre Natural Language Inference (MultiNLI) dataset.
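An NLI model is needed because zero-shot classification is usually framed as entailment: the query is the premise, and each label is turned into a hypothesis that the model scores. The sketch below builds those premise/hypothesis pairs in plain Python. It assumes the `"This example is {}."` template, which is the default of the Hugging Face zero-shot-classification pipeline; whether your Haystack version uses that exact template is an assumption, and `build_nli_pairs` is an illustrative helper, not a library function:

```python
# Illustrative helper (not a library function): frame zero-shot classification
# as natural language inference by pairing the query with one hypothesis per label.
HYPOTHESIS_TEMPLATE = "This example is {}."  # assumed template, see the text above


def build_nli_pairs(query, labels, template=HYPOTHESIS_TEMPLATE):
    """Return (premise, hypothesis) pairs that an NLI model would score."""
    return [(query, template.format(label)) for label in labels]


pairs = build_nli_pairs("Who was Sergio Leone?", ["music", "cinema"])
for premise, hypothesis in pairs:
    print(f"premise={premise!r} hypothesis={hypothesis!r}")
```

The label whose hypothesis receives the highest entailment score becomes the predicted class, which is why the set of labels can be chosen freely at initialization time.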

To perform the classification, run:

import pandas as pd

queries = [
    "In which films does John Travolta appear?",  # query about cinema
    "What is the Rolling Stones first album?",  # query about music
    "Who was Sergio Leone?",  # query about cinema
]

# This object will contain the results of the classification.
# The "Query" key will contain the list of queries.
# The "Output Branch" key will contain the branch each query was routed through.
# The "Class" key will contain the classification label of each query.
query_classification_results = {"Query": [], "Output Branch": [], "Class": []}

for query in queries:
    result = query_classifier.run(query=query)
    query_classification_results["Query"].append(query)
    query_classification_results["Output Branch"].append(result[1])
    # output_1 corresponds to the first label ("music"), output_2 to the second ("cinema")
    query_classification_results["Class"].append("music" if result[1] == "output_1" else "cinema")

pd.DataFrame.from_dict(query_classification_results)
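The conditional expression above works only for two labels. Assuming that the classifier assigns output edges in the order of the `labels` list (`output_1` for the first label, `output_2` for the second, and so on, which matches the two-label example above), a lookup generalizes to any number of labels. `edge_to_label` is an illustrative helper, and the third label here is made up for the example:

```python
def edge_to_label(edge_name, labels):
    """Map 'output_N' to the N-th label (1-based), assuming edges follow label order."""
    prefix, _, number = edge_name.rpartition("_")
    if prefix != "output" or not number.isdigit():
        raise ValueError(f"Unexpected edge name: {edge_name!r}")
    index = int(number) - 1
    if not 0 <= index < len(labels):
        raise ValueError(f"{edge_name!r} has no matching label in {labels!r}")
    return labels[index]


labels = ["music", "cinema", "sports"]  # "sports" is a made-up third label
print(edge_to_label("output_1", labels))
print(edge_to_label("output_3", labels))
```

With this helper, the `"Class"` column could be filled with `edge_to_label(result[1], labels)` instead of a hard-coded two-way condition.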
