DocumentClassifier

This Node classifies Documents by attaching metadata to them. You can use it in a pipeline or on its own. This page explains how to use it in both use cases.

The DocumentClassifier node is a transformer-based classification model used to create predictions that are attached to Documents as metadata. For example, by using a sentiment model, you can label each document as being either positive or negative in sentiment. Thanks to tight integration with the Hugging Face model hub, you can supply a model name to load it.

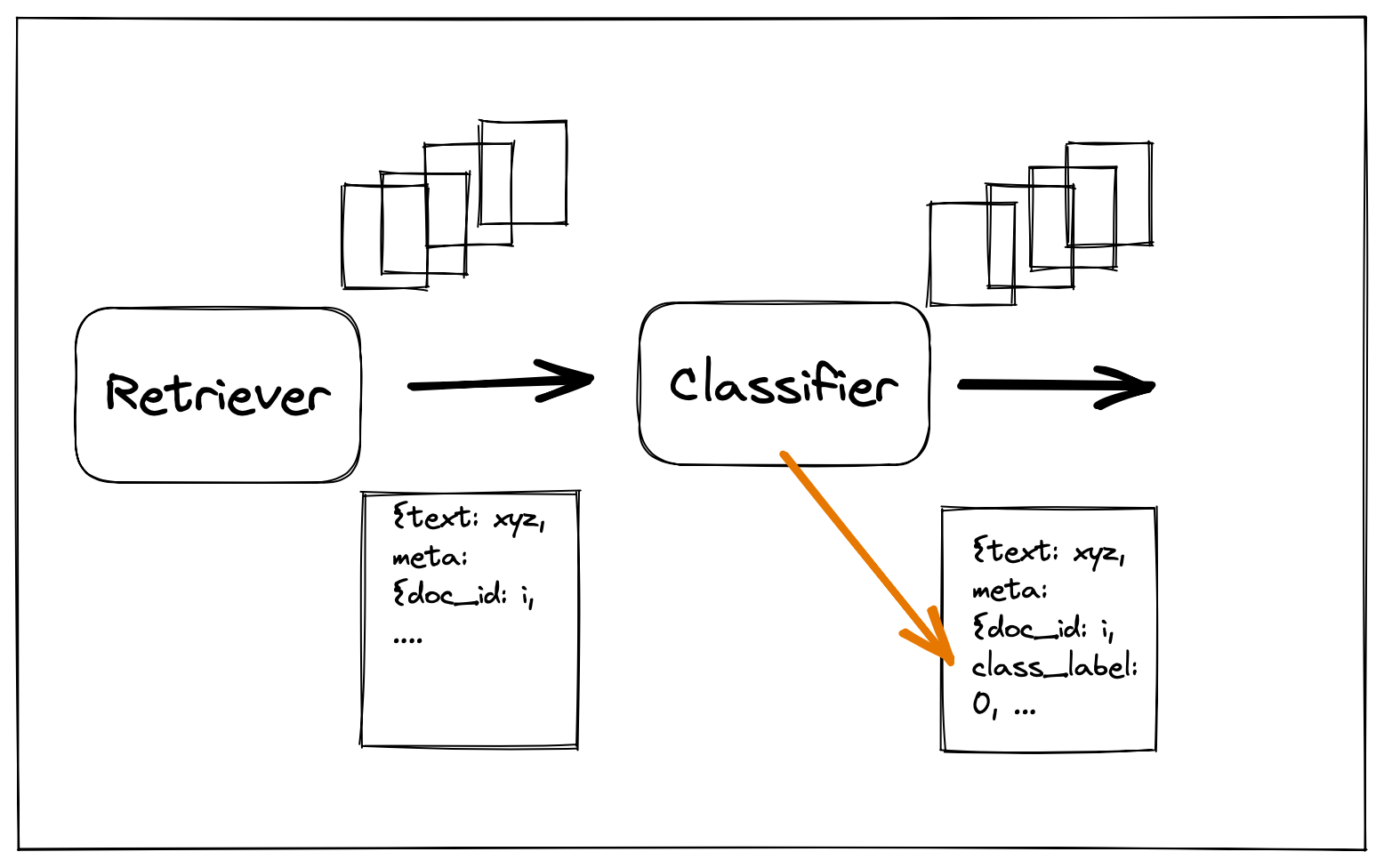

A Document Classifier adds a classification label as metadata to Documents.

Note

The Document Classifier is different from the Query Classifier. While the Query Classifier categorizes incoming queries in order to route them to different parts of the pipeline, the Document Classifier is used to create classification labels that can be attached to retrieved documents as metadata. The QueryClassifier is a decision Node while the DocumentClassifier is not.

Usage

To initialize the Node:

from haystack.nodes import TransformersDocumentClassifier

doc_classifier_model = 'bhadresh-savani/distilbert-base-uncased-emotion'

doc_classifier = TransformersDocumentClassifier(model_name_or_path=doc_classifier_model)

Alternatively, if you can't find a classification model that has been pre-trained for your exact classification task, you can use zero-shot classification with a custom list of labels and a Natural language Inference (NLI) model as follows:

doc_classifier_model = 'cross-encoder/nli-distilroberta-base'

doc_classifier = TransformersDocumentClassifier(

model_name_or_path=doc_classifier_model,

task="zero-shot-classification",

labels=["negative", "positive"]

To use it in a Pipeline, run:

pipeline = Pipeline()

pipeline.add_node(component=retriever, name="Retriever", inputs=["Query"])

pipeline.add_node(component=doc_classifier, name='DocClassifier', inputs=['Retriever'])

It can also be run in isolation:

documents = doc_classifier.predict(

documents = [doc1, doc2, doc3, ...]

):

Updated almost 2 years ago